SmartViewer

About This Space

This workspace implements Watson Visual Recognition. The application uses your device camera to capture images and sends them to Watson Visual Recognition, which tells you what it sees.

Last updated on April 4, 2019

Forked from: /alex/watson-age-code/

Public Permissions: View Run Comment

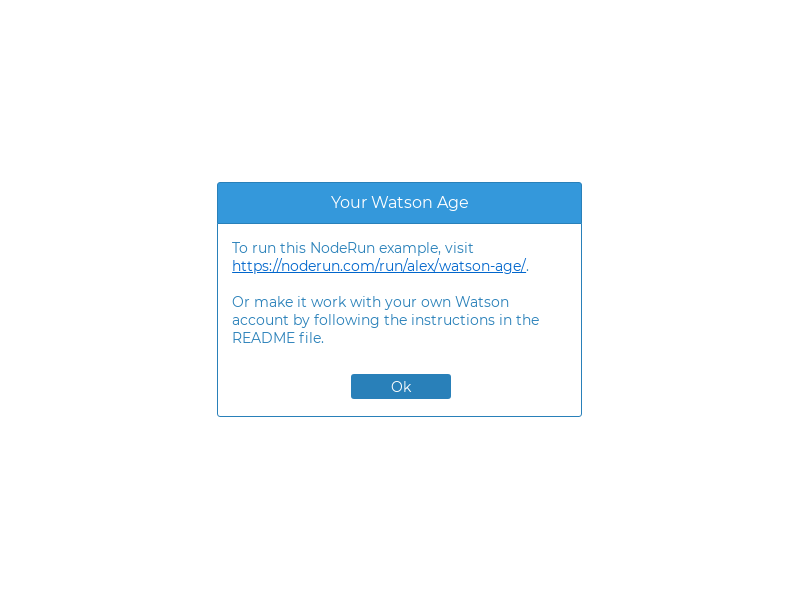

What is your Watson Age?

This workspace implements Watson Visual Recognition. The application uses your device camera to take your photo, estimates how old you look, and tells you the result in spoken language through the speakers on your device.

Making it work

First, create your own space:

- Click Fork on the ribbon to make a copy of the original space

- Click Update on the ribbon to update public permissions. To keep your API key private, the Open and Modify permissions should be unchecked.

Next, create a Watson Visual Recognition resource:

- Sign up for an account at cloud.ibm.com, if you don’t already have one

- Click create resource and select Visual Recognition

- Create service credentials and copy the API key into your credentials.json file on NodeRun.com
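As a rough sketch of how server-side code might read the key from credentials.json, the helper below loads the file and checks that a key is present. The function name loadApiKey and the "apikey" field name are assumptions made for illustration; the actual layout of credentials.json in this space may differ.

```javascript
const fs = require("fs");

// Hypothetical helper: reads the API key from a credentials file.
// The "apikey" field name is an assumption; match it to your own file.
function loadApiKey(filePath) {
  const raw = fs.readFileSync(filePath, "utf8");
  const credentials = JSON.parse(raw);
  if (typeof credentials.apikey !== "string" || credentials.apikey.trim() === "") {
    throw new Error("No API key found in " + filePath);
  }
  // Trim stray whitespace that can sneak in when pasting the key.
  return credentials.apikey.trim();
}
```

Failing early on a missing key gives a clearer error than a rejected request to the service later on.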

Finally, you’re ready to launch your own copy of the app. You can either use the Launch menu or simply use your phone, tablet, or a laptop with a camera to browse to the run URL for your new workspace.

Packages Used

The following packages are used by this space:

- watson-developer-cloud: connects to IBM Watson

- dataurl: converts the camera snapshot to the appropriate format for Watson
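To make the conversion step concrete, here is a minimal sketch of what decoding a snapshot involves, assuming the browser sends the image as a base64 data URL. It uses only Node.js built-ins and a hypothetical function name, decodeDataUrl; it is not the dataurl package's API and is not taken from this space's code.

```javascript
// Illustration only: decodes a base64 data URL into its MIME type and bytes.
// The space itself relies on the dataurl package for this step.
function decodeDataUrl(dataUrl) {
  const match = /^data:([^;,]+);base64,([A-Za-z0-9+/=]+)$/.exec(dataUrl);
  if (!match) {
    throw new Error("Not a base64 data URL");
  }
  return {
    mimetype: match[1],                     // for example "image/png"
    data: Buffer.from(match[2], "base64")   // raw image bytes
  };
}
```

The resulting Buffer holds the raw image bytes, which is the kind of binary payload an image recognition service typically expects.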

Select Server --> Install npm Packages to review the packages that are installed.

The code

The server-side code for this application is in main.js. It uses the Node.js require() function to load functionality from external packages.

- main.js refers to display.json, a Rich Display File created in the Visual Designer Tool

- display.json loads public/client-scripts.js through the screen-level "external javascript" property

- client-scripts.js contains client-side code that the browser uses to do things like start the camera, display the results, and use speech to explain the results
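The speech step might look roughly like the sketch below. The function names describeAge and speak, and the idea that the result arrives as a minimum and maximum age, are assumptions for illustration; the actual contents of client-scripts.js are not shown here. The speech call uses the browser's standard Web Speech API.

```javascript
// Hypothetical helper: builds the sentence to speak from an estimated age range.
function describeAge(minAge, maxAge) {
  if (minAge === maxAge) {
    return "You look about " + minAge + " years old.";
  }
  return "You look between " + minAge + " and " + maxAge + " years old.";
}

// Speaks the text with the browser's Web Speech API when it is available.
// Returns true if speech was queued, false otherwise (for example, in Node.js).
function speak(text) {
  if (typeof window === "undefined" || !("speechSynthesis" in window)) {
    return false;
  }
  window.speechSynthesis.speak(new SpeechSynthesisUtterance(text));
  return true;
}
```

Keeping the sentence building separate from the speech call means the same text can also be displayed on screen when speech is unavailable.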
